Prof. ChatGPT and Elsevier: ChatGPT as the Author of a Research Paper

Now we have a new researcher in the house

✉ Dear Readers,

Sorry for delaying this post by a whole day. I needed to fact-check the article below and its related sources. News and events like the one below are trendy by nature and filled with hype. So it is crucial for me not to lose sight of the bigger picture and to make sure that the actual data, information and sources are reliable.

Anyway, let’s continue.

Now this is pretty insane.

Elsevier, a leading publisher of scientific and technical research, has listed ChatGPT as a journal author. Along with Elsevier, Cambridge University Press also seems to allow the use of ChatGPT for academic writing. This suggests that these publishers somehow recognised an A.I. tool as an ‘author’ rather than a research tool.

This has caused a buzz in the A.I. community and left many people sceptical. While many lauded it and celebrated it as a milestone for Generative AI in research, conservative researchers (as I like to call them) argue that an A.I. ‘tool’ should not be credited as an author in a research journal.

ChatGPT as Co-Author

Have a look at this journal editorial from Elsevier:

This was published in the “Nurse Education in Practice” journal. It may be the first time an A.I. tool has got its own identity as an ‘author’ of a research paper.

And wait, there’s more.

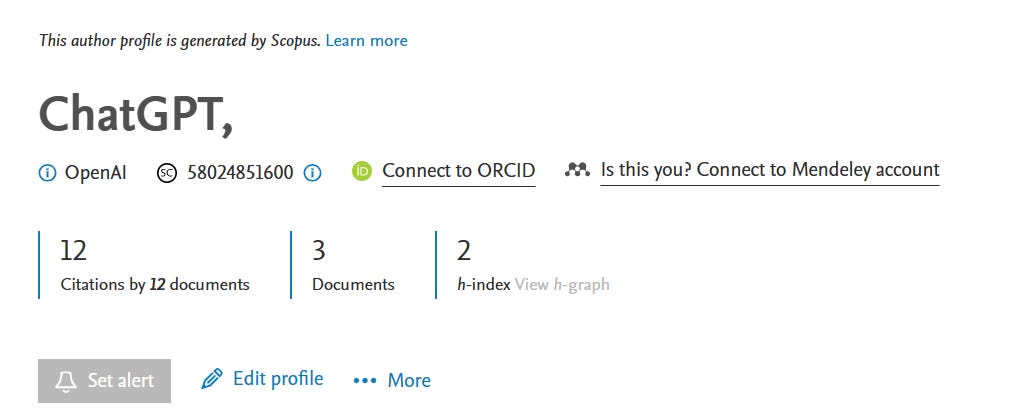

I dug deeper to find out more about this “author”, and here’s what I found:

It turns out that this “researcher”, who is affiliated with OpenAI, has already published three documents and received more than 12 citations. This is incredible for an author that is not ‘living’ in the first place.

The documents that ChatGPT authored are as follows:

Open artificial intelligence platforms in nursing education: Tools for academic progress or abuse? (by O'Connor, S., ChatGPT)

ChatGpt: Open Possibilities (by Aljanabi, M., Ghazi, M., Ali, A.H., Abed, S.A., ChatGpt)

Towards Artificial Intelligence-Based Cybersecurity: The Practices and ChatGPT Generated Ways to Combat Cybercrime (by Mijwil, M.M., Aljanabi, M., ChatGPT)

And these are the two journals in which these papers were published:

Iraqi Journal for Computer Science and Mathematics

Nurse Education in Practice

I will leave the links to these at the end so that you can check them too. And this is, in fact, the actual research paper co-authored by ChatGPT.

Fascinatingly, in each of these papers the subject matter is related to Artificial Intelligence, and one of the authors, ChatGPT, is the subject matter itself. It's as if a scientific instrument you are researching were itself listed as an author.

But hearing this is not really new for me. Why? Because I have seen countless essays about “how can A.I. be controlled” and “can A.I. replace my job” written by ChatGPT itself. So I am used to the irony of such instances.

Is our Professor ChatGPT worthy enough?

This could be a huge leap in the field of generative A.I. (maybe), and A.I. can certainly be a great tool to help you with your research. But as I have emphasised from time to time, while A.I. can ‘help’ in your research, it shouldn't ‘do’ the research for you. Why? Simple: it is not reliable enough.

Even the recent A.I. advancements since the release of GPT-4 still have issues, like a tendency to hallucinate and spread misinformation. Thus, while A.I. can be a good aid to your research ventures, it cannot be fully relied upon or trusted. At least not now.

But I don't feel right about this, and here’s why:

No doubt it’s a big moment: an A.I. getting recognised as an author. But doesn’t it feel like making ChatGPT an author violates the journal's basic criteria for authorship? Those criteria were, obviously, written for humans.

To show you what I really mean, have a look at this Authorship Criteria page that Elsevier publishes:

According to this, there are four criteria that a contributor must satisfy to be listed as an ‘author’.

Substantial contribution to the study conception and design, data acquisition, analysis, and interpretation.

Drafting or revising the article for intellectual content.

Approval of the final version.

Agreement to be accountable for all aspects of the work related to the accuracy or integrity of any part of the work.

I want to emphasize two specific points: Approval of the final version, and Agreement to be accountable for … accuracy or integrity of any part of the work.

These, the third and fourth criteria on their “ethics” page, are where, quite frankly, we have a problem.

Should ChatGPT, or any other A.I. for that matter, have the power to “approve” a paper? In my opinion, no. And furthermore, is an A.I. credible enough to be accountable for the “accuracy” and integrity of ‘any part of the work’? It's a no-brainer: of course not.

See, these research papers, in the end, are made BY humans FOR humans. As far as A.I. is concerned, it (ChatGPT, in this case) has no actual use for these papers besides serving as training data. And even that, in the end, is done by humans: finding good data sets, feeding them in, and tweaking the A.I. so that it makes more accurate predictions.

The better the data, the better the predictions. This is one of the core principles of Machine Learning.

But A.I. can’t take accountability for “accurate” results, no matter how sophisticated your data is and no matter how much of it you use (people seem to believe that more data equals more accuracy, which is certainly not true). It won't take you more than two or three essays from Gary Marcus to realise how messed up the ‘accuracy’ part is in current ‘hyper-intelligent’ models.

Conclusion

And for years to come, I don't think A.I. will be accurate enough to be held accountable for the errors in a paper. But that is for the future to see. And while existing A.I. models are getting better and better, it would take decades for these models to be at their best.

For now, I leave you with this question to ponder:

Without touching on the moral and ethical aspects of this event, does it seem right for an A.I. to be credited as an author of a research paper?

See you in the next post.

Links to these papers:

ChatGpt: Open Possibilities | Iraqi Journal For Computer Science and Mathematics (doi.org)

Disagree completely. ChatGPT has neither the capacity nor the responsibility to be the author of a scientific paper.